Published on: November 20, 2015 by Scott S

Scenario:

CEPH: TECHNICAL ARCHITECTURE

We saw the basic Ceph architecture and design principles in the previous part. Let us go straight into the technical architecture details.

The diagram below shows a very basic view of the Ceph storage architecture.

Ceph Storage Cluster

A reliable, easy-to-manage, distributed object storage cluster that stores and handles all data as objects.

Ceph Object Gateway

A powerful Amazon S3- and Swift-compatible gateway that brings the power of the Ceph Object Store to modern applications. If you want an application to store and retrieve objects in the Ceph Object Store, you do it via the Ceph Object Gateway.

Ceph Block Device

A distributed virtual block device that delivers high-performance, cost-effective storage for virtual machines and related applications. If you want a virtual machine or disk to use the Ceph Object Store, you do it through the Ceph Block Device.

Ceph File System

A distributed, scale-out file system with POSIX semantics that provides storage for legacy and modern applications. If you want to store data as files and directories in the Ceph Object Store, you do it via the Ceph File System.

Note: All data is stored in the same object store, irrespective of the type of gateway used.

Below is a more detailed diagram that uses the technical names of the components in the Ceph storage architecture.

RADOS (Reliable Autonomic Distributed Object Store) is an object store made up of self-healing, self-managing, intelligent storage nodes (as discussed in Part 1). RADOS forms the entire storage cluster for Ceph: all the nodes configured as Ceph storage collectively form the RADOS cluster. RADOS is the core layer, and all other layers of Ceph are placed above it.

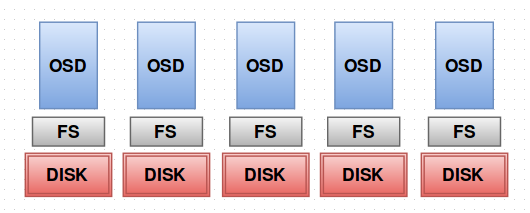

Let us assume we have five nodes (or five disks) that are to be configured as a Ceph cluster.

The disks can be individual disks from each node, or even RAID groups. A file system is created on top of each disk; currently Ceph supports the btrfs, ext4 and XFS file systems. On top of this file system, a software layer called the OSD (Object Storage Daemon) is added, which makes the disk part of the Ceph storage cluster.


The OSD is the software that makes its disk/node part of the Ceph cluster. When configuring an OSD, we give it a path, and that path becomes a storage location used by the Ceph cluster.
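To make the "a path becomes a storage location" idea concrete, here is a minimal toy sketch. `ToyOSD` is a hypothetical class, not Ceph code: it assumes the disk already carries a mounted file system and simply stores each object as a file under its configured path. Real OSDs use a much more elaborate on-disk layout, replication and journaling, none of which is modeled.

```python
import os
import tempfile


class ToyOSD:
    """Hypothetical, heavily simplified OSD.

    It is given one data path on an already-mounted file system and stores
    each object as a plain file under that path. Only the "path becomes
    storage" idea is modeled here, not Ceph's real on-disk format.
    """

    def __init__(self, osd_id: int, data_path: str):
        self.osd_id = osd_id
        self.data_path = data_path
        # The configured path becomes this OSD's storage location.
        os.makedirs(data_path, exist_ok=True)

    def _object_file(self, name: str) -> str:
        # One file per object; object names are assumed to be file-name safe.
        return os.path.join(self.data_path, name)

    def write(self, name: str, data: bytes) -> None:
        with open(self._object_file(name), "wb") as f:
            f.write(data)

    def read(self, name: str) -> bytes:
        with open(self._object_file(name), "rb") as f:
            return f.read()


if __name__ == "__main__":
    # A temporary directory stands in for a mounted XFS/ext4/btrfs disk.
    with tempfile.TemporaryDirectory() as mount_point:
        osd = ToyOSD(0, os.path.join(mount_point, "osd.0"))
        osd.write("hello-object", b"stored by osd.0")
        print(osd.read("hello-object"))
```

The layering mirrors the text: disk, then file system (the temporary directory), then the OSD software on top.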

When you interact with a storage cluster, you interact with the entire cluster as one logical unit, not with individual nodes or storage locations.

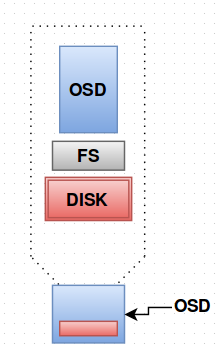

From now on, a disk with a file system and an OSD configured on top of it will collectively be called an OSD.

A Ceph cluster will look like the one shown below. It has two different kinds of members: OSDs and Monitors (M).

OSDs

As discussed, each blue and red box is an OSD, which makes a disk/node available as a storage location for the Ceph cluster. It is the software that gives access to our data in the cluster. All the OSDs collectively form the storage cluster.

MONITORS

Monitors do not form part of the storage cluster, since they do not store the cluster's data. Instead, they are an integral part of Ceph because they monitor OSD status and generate the cluster map. A monitor keeps track of which OSDs are up and which are down, which OSDs are peering, the state of each OSD, and so on, at any given point in time. In short, it acts as a monitor of all the OSDs in a storage cluster.

It is better to have an odd number of monitors, and only a small number of them are needed. Some features of monitors are given below:
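Why an odd number? Ceph monitors agree on the cluster map using Paxos, which needs a majority (a quorum) of monitors to be reachable. The small sketch below just does that majority arithmetic; the function names are illustrative, not part of any Ceph API.

```python
def quorum_size(monitors: int) -> int:
    """Smallest strict majority of `monitors`."""
    if monitors < 1:
        raise ValueError("need at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """How many monitors can fail while a majority is still reachable."""
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} monitors: quorum={quorum_size(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

Running it shows that 3 and 4 monitors both tolerate only one failure, and 5 and 6 both tolerate two. An even count adds a machine without adding fault tolerance, which is why odd numbers are preferred.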

So this is RADOS, and everything else in Ceph is built on top of it.

LIBRADOS is a set of libraries, or an API, that lets applications communicate with the Ceph storage cluster (RADOS). Any application that needs to communicate with the Ceph storage cluster must do so via the librados API.

Only LIBRADOS has direct access to the storage cluster. By default, the Ceph Storage Cluster (RADOS) provides three basic storage services: object storage, block storage and file storage. However, Ceph has made sure that these are not the limits.

Using the LIBRADOS API, you can create your own interface to the storage cluster (RADOS), beyond the RESTful (RGW), block (RBD) and POSIX (CephFS) semantics.

When an application wants to talk to the storage cluster (RADOS), it is linked with LIBRADOS, which gives the application the functions it needs to interact with the storage cluster.
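The sketch below illustrates both points: an application talks to the cluster only through a librados-style object API, and a custom interface can be layered on top of it. It is an in-memory stand-in, so it runs without a cluster. The method names `connect`, `open_ioctx`, `write_full` and `read` echo the real Python `rados` binding, but `ToyRados`, `ToyIoCtx` and `CounterStore` are hypothetical classes written for this example.

```python
class ToyIoCtx:
    """In-memory stand-in for an I/O context bound to one pool."""

    def __init__(self, pool: dict):
        self._pool = pool

    def write_full(self, key: str, data: bytes) -> None:
        # Replace the whole object with `data`.
        self._pool[key] = bytes(data)

    def read(self, key: str) -> bytes:
        # Raises KeyError for a missing object (real librados raises its own error).
        return self._pool[key]


class ToyRados:
    """Hypothetical stand-in for the librados entry point. Pools live in a
    dict instead of on OSDs reached over the native protocol."""

    def __init__(self):
        self._pools = {}
        self.connected = False

    def connect(self) -> None:
        self.connected = True

    def open_ioctx(self, pool_name: str) -> ToyIoCtx:
        if not self.connected:
            raise RuntimeError("call connect() first")
        return ToyIoCtx(self._pools.setdefault(pool_name, {}))


class CounterStore:
    """A custom interface built only on librados-style calls:
    named integer counters, each stored as one object."""

    def __init__(self, ioctx: ToyIoCtx):
        self._ioctx = ioctx

    def increment(self, name: str) -> int:
        try:
            value = int(self._ioctx.read(name).decode())
        except KeyError:
            value = 0  # first use of this counter
        value += 1
        self._ioctx.write_full(name, str(value).encode())
        return value


if __name__ == "__main__":
    cluster = ToyRados()
    cluster.connect()
    counters = CounterStore(cluster.open_ioctx("stats"))
    counters.increment("page-views")
    print(counters.increment("page-views"))  # prints 2
```

This read-modify-write counter is not safe under concurrent clients; a real custom interface would need extra coordination, which this sketch deliberately leaves out.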

See diagram below:

LIBRADOS communicates with RADOS using a native protocol, i.e. a socket-based protocol designed solely for this purpose. Using a native protocol makes communication between LIBRADOS and the storage cluster very fast, compared with going through general-purpose service sockets or protocols.

The roles of LIBRADOS are given below:

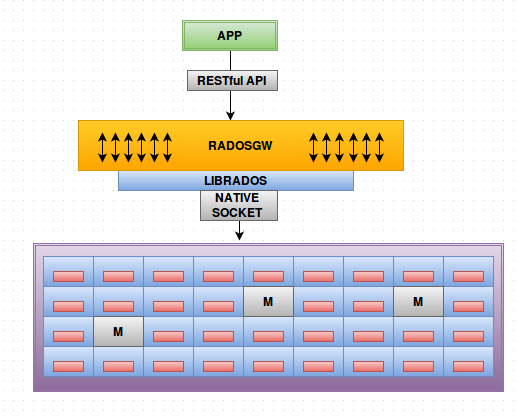

To use the Ceph cluster (RADOS) for object storage, the RADOS Gateway daemon, radosgw, is used.

It lets applications that use RESTful APIs store their data in the cluster (RADOS) as objects. It is an HTTP REST gateway for the RADOS object store. radosgw is a FastCGI module and can be used with any FastCGI-capable web server.

In a REST architecture, a client reads or writes a designated resource identified by a URL, and the response is a document, often an XML file, that describes and includes the desired content. REST typically runs over HTTP, using standard methods such as GET, PUT and DELETE. Web services that follow the REST architecture are said to expose RESTful APIs (or REST APIs).

RADOS object storage supports two interfaces: one compatible with Amazon S3 and one compatible with OpenStack Swift.

The RADOS Gateway sits between your application and the cluster (RADOS) as shown in the diagram below:

Any application that wants the data to be stored as objects on RADOS cluster uses radosgw.


RADOSGW is built on top of LIBRADOS, since only librados can speak with the RADOS cluster, which it does over its native socket protocol.

The application talks to RADOSGW through RESTful APIs, specifically the Amazon S3 and OpenStack Swift APIs.
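To show what "RESTful" means for an S3 client, the sketch below builds (but does not send) path-style S3 requests with the standard library: the object is the resource `/<bucket>/<key>`, and the HTTP method says what to do with it. The endpoint host and port are assumptions; substitute your own radosgw address. Real requests to radosgw must also carry an AWS-style signature in an `Authorization` header, which is omitted here.

```python
from urllib.parse import quote
from urllib.request import Request

# Assumption: a hypothetical radosgw endpoint; substitute your own host/port.
RGW_ENDPOINT = "http://rgw.example.com:7480"


def s3_object_url(bucket: str, key: str) -> str:
    # Path-style S3 addressing: the resource is /<bucket>/<key>.
    return f"{RGW_ENDPOINT}/{bucket}/{quote(key)}"


def s3_put_object(bucket: str, key: str, body: bytes) -> Request:
    """Build, but do not send, an unsigned S3 PUT Object request."""
    return Request(s3_object_url(bucket, key), data=body, method="PUT",
                   headers={"Content-Type": "application/octet-stream"})


def s3_get_object(bucket: str, key: str) -> Request:
    """Build, but do not send, an unsigned S3 GET Object request."""
    return Request(s3_object_url(bucket, key), method="GET")


if __name__ == "__main__":
    put = s3_put_object("photos", "2015/cat.jpg", b"...jpeg bytes...")
    get = s3_get_object("photos", "2015/cat.jpg")
    print(put.get_method(), put.full_url)
    print(get.get_method(), get.full_url)
```

In practice an S3 client library handles signing and response parsing (S3 responses such as bucket listings are XML), but the underlying verb-plus-URL shape is the same.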

Ceph's RADOS Block Devices (RBD) interact with OSDs using either the kernel module KRBD or the librbd library that Ceph provides. KRBD provides block devices to Linux hosts, and librbd provides block storage to VMs. RBDs are most commonly used by virtual machines. They give the virtualization environment added features, such as migrating a VM between containers on the fly.

Consider many 10MB chunks of storage spread across different OSDs in the RADOS cluster. librbd gathers these chunks from RADOS, presents them as a single block of storage (block storage), and makes it available to the VM. One way to access RBD is to use the librbd library, as shown below.

Here the small black boxes inside the OSDs are the 10MB chunks of storage. librbd links with the virtualization container and presents these chunks to the VM as a single disk.
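The core arithmetic behind "many chunks presented as one disk" is mapping a byte offset on the virtual disk to a chunk and an offset inside that chunk. The sketch below uses the 10MB chunk size from the text (RBD's actual default object size is 4MB and is configurable). The object-name format `<image>.<16 hex digits>` is a hypothetical illustration, not necessarily RBD's exact naming scheme.

```python
CHUNK_SIZE = 10 * 1024 * 1024  # 10MB chunks, as in the text (assumption)


def locate(image: str, offset: int, chunk_size: int = CHUNK_SIZE):
    """Map a byte offset on the virtual disk to (object name, offset in object)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    index = offset // chunk_size  # which chunk holds this byte
    return f"{image}.{index:016x}", offset % chunk_size


def split_io(image: str, offset: int, length: int, chunk_size: int = CHUNK_SIZE):
    """Split one disk read/write into per-object pieces:
    a list of (object name, offset in object, length)."""
    pieces = []
    while length > 0:
        name, in_obj = locate(image, offset, chunk_size)
        n = min(length, chunk_size - in_obj)  # never cross a chunk boundary
        pieces.append((name, in_obj, n))
        offset += n
        length -= n
    return pieces


if __name__ == "__main__":
    # An 8KB write that straddles the boundary between chunk 0 and chunk 1.
    for piece in split_io("vm-disk", CHUNK_SIZE - 4096, 8192):
        print(piece)
```

Because each piece names a separate object, the two halves of that write can land on different OSDs, which is how a single virtual disk ends up spread across the cluster.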

As the image shows, librbd is linked with LIBRADOS to communicate with the RADOS cluster, and is also linked with the virtualization container, through which it provides the virtual disk volume to the VMs.

The main advantage of this architecture is that the data (the image) of a virtual machine is not stored on the container node but in the RADOS cluster. We can therefore easily migrate a VM on the fly by suspending it on one container and bringing it up again on another, as shown in the image below:

Another way to access RBD is to use the Kernel Module KRBD as shown below:

Using the KRBD module, you can make the block storage available to your Linux server or host. It appears as a block device, just like the one for a local HDD on a Linux host, and you can easily mount it for use.

So in general, RBD provides the following features.

CEPHFS is a POSIX-compliant distributed file system with a Linux kernel client and support for Filesystem in Userspace (FUSE). It allows data to be stored in files and directories, as a normal file system does, and provides a traditional file system interface with POSIX semantics.

Newer technologies increasingly use object storage rather than file systems, but Ceph provides a traditional file system to the end user while storing the data as objects on the back end.

Until now, the RADOS cluster had only two types of members, i.e. OSDs and Monitors. In this architecture a new member is added to the RADOS cluster: the METADATA server, as shown in the diagram below.

When you mount the CEPHFS file system on a client, the client first talks to the metadata server for all the POSIX semantics, such as permissions, ownership, timestamps and the hierarchy of directories and files. Once the metadata server has provided these to the client, the OSDs provide the data. The metadata server does not handle file data in any way; it only stores the POSIX semantics for the data being stored or retrieved.
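The toy model below sketches that split. A hypothetical `ToyMDS` holds only metadata (inode number, mode, owner, size) and performs a simplified permission check; the client then fetches the file's contents directly from objects on the OSDs, modeled as a plain dict. The per-object size and the object-name pattern `<inode hex>.<8 hex digits>` are assumptions made for illustration, and group permissions are ignored.

```python
import stat
from dataclasses import dataclass

OBJECT_SIZE = 4 * 1024 * 1024  # assumed size of each file-data object


@dataclass
class Inode:
    ino: int
    mode: int  # file type and permission bits, as in stat(2)
    uid: int
    size: int


class ToyMDS:
    """Holds only metadata (path -> inode); never touches file contents."""

    def __init__(self):
        self._tree = {}

    def create(self, path: str, ino: int, mode: int, uid: int, size: int) -> None:
        self._tree[path] = Inode(ino, mode, uid, size)

    def lookup(self, path: str, uid: int) -> Inode:
        inode = self._tree[path]  # KeyError plays the role of ENOENT
        # Simplified check: world-readable, or owner with owner-read bit.
        owner_ok = uid == inode.uid and inode.mode & stat.S_IRUSR
        if not (inode.mode & stat.S_IROTH or owner_ok):
            raise PermissionError(path)
        return inode


def read_file(mds: ToyMDS, osds: dict, path: str, uid: int) -> bytes:
    """Client read path: metadata from the MDS, then data straight from OSD objects."""
    inode = mds.lookup(path, uid)
    n_objects = (inode.size + OBJECT_SIZE - 1) // OBJECT_SIZE
    data = b"".join(osds[f"{inode.ino:x}.{i:08x}"] for i in range(n_objects))
    return data[: inode.size]


if __name__ == "__main__":
    mds = ToyMDS()
    mds.create("/home/a.txt", 0x10000000000, stat.S_IFREG | 0o640, 1000, 11)
    osds = {"10000000000.00000000": b"hello world"}
    print(read_file(mds, osds, "/home/a.txt", uid=1000))
```

Note that `read_file` never sends data through the MDS: the MDS answers "who may read what, and how big is it", and the object names tell the client where the bytes live.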

The first configured Ceph metadata server stores all the POSIX semantics for all the OSDs. As the number of metadata servers increases, they split the load among themselves, so there is no single point of failure even for the metadata servers in the cluster.

This completes the detailed overview of all the components in the Ceph storage architecture.

In the next blog post, we will see how Ceph places its data in the cluster so efficiently that it never gets a placement wrong.

References

The diagrams and metaphors used are inspired by Inktank Vice President Ross Turk's talk introducing Ceph.


Category : Linux
