Published on: January 26, 2016 by Scott S

Scenario:

This post focuses on implementing Block Storage, the OpenStack Cinder service, for an OpenStack setup on Ubuntu. As we have seen, the controller and compute nodes are the core essentials of OpenStack; here a third node comes into play alongside them, which OpenStack calls the cinder node. In this architecture the controller node hosts the required services for the Block Storage service, and the cinder node actually stores the data and serves volumes using the cinder-volume service.

A. Install the packages for the Block Storage service on the controller node using the following command

root@controller# apt-get install cinder-api cinder-scheduler

The Block Storage service uses MySQL as the database to store information. As mentioned earlier, you have to specify the location of the database in the cinder configuration file.

Add or modify the entry shown below under the [database] section of the /etc/cinder/cinder.conf file.

[database]

connection = mysql://cinder:CINDER_DBPASS@controller/cinder

Replace CINDER_DBPASS with the desired password for the cinder database user.
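One way to confirm that the entry was saved as intended is to read it back from the file. The helper below is a minimal sketch and not part of OpenStack: ini_get is a hypothetical name, and it assumes the simple "key = value" layout used in this post.

```shell
# Print the value of an option inside a given section of an INI-style file.
# ini_get is a hypothetical helper name, not an OpenStack tool.
ini_get() {
    # $1: config file, $2: section name, $3: option name
    awk -v section="$2" -v key="$3" '
        BEGIN { header = "[" section "]" }
        # A line starting with "[" opens a new section.
        /^\[/ { in_section = ($0 == header); next }
        in_section {
            pos = index($0, "=")
            if (pos == 0) next
            name  = substr($0, 1, pos - 1)
            value = substr($0, pos + 1)
            # Trim surrounding spaces and tabs.
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            gsub(/^[ \t]+|[ \t]+$/, "", value)
            if (name == key) { print value; exit }
        }
    ' "$1"
}
```

For example, `ini_get /etc/cinder/cinder.conf database connection` should print the connection string set above.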

B. Log in to the MySQL database as root on the controller node, then create the cinder database and grant the cinder database user access to it

root@controller# mysql -u root -p

mysql> CREATE DATABASE cinder;

mysql> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';

mysql> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';

Remember to change CINDER_DBPASS to match the password set in the cinder configuration file.
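Because the same password has to appear in every statement, it can help to generate the SQL from a single value. This is a minimal sketch: cinder_db_sql is a hypothetical helper name, and it assumes the password contains no single quote character.

```shell
# Print the SQL statements from step B for one password value.
# cinder_db_sql is a hypothetical helper; it only prints text and
# does not connect to MySQL itself.
cinder_db_sql() {
    # $1: password for the cinder database user (no single quotes)
    cat <<EOF
CREATE DATABASE cinder;
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY '$1';
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY '$1';
EOF
}
```

The output can be reviewed first and then, if it looks right, piped into the mysql client on the controller.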

C. Create the database tables for the Block Storage service (cinder) using the command below.

root@controller# su -s /bin/sh -c "cinder-manage db sync" cinder

D. Create a cinder user that the block storage service can use to authenticate with the Identity or Keystone Service

root@controller# keystone user-create --name=cinder --pass=CINDER_PASS --email=cinder@example.com

Substitute your own values for CINDER_PASS and the email address.

E. Give the cinder user the admin role in the service tenant using the keystone command

root@controller# keystone user-role-add --user=cinder --tenant=service --role=admin

Add/Modify the following entries in the cinder configuration file /etc/cinder/cinder.conf under the [keystone_authtoken] section as shown below.

[keystone_authtoken]

auth_uri = http://controller:5000

auth_host = controller

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = cinder

admin_password = CINDER_PASS

Replace CINDER_PASS with the password you set for the cinder user created with the keystone user-create command.

F. Configure the Block Storage Service to use RabbitMQ as the Message Broker

In the [DEFAULT] section of the /etc/cinder/cinder.conf file, set these entries, replacing RABBIT_PASS with the password you chose for the RabbitMQ guest user:

[DEFAULT]

rpc_backend = rabbit

rabbit_host = controller

rabbit_port = 5672

rabbit_userid = guest

rabbit_password = RABBIT_PASS

Register the Block Storage Service with the Identity service and create the endpoints as shown below

root@controller# keystone service-create --name=cinder --type=volume --description="OpenStack Block Storage"

root@controller# keystone endpoint-create \
  --service-id=$(keystone service-list | awk '/ volume / {print $2}') \
  --publicurl=http://controller:8776/v1/%\(tenant_id\)s \
  --internalurl=http://controller:8776/v1/%\(tenant_id\)s \
  --adminurl=http://controller:8776/v1/%\(tenant_id\)s

Register a service and endpoint for Version 2 of the Block Storage service API.

root@controller# keystone service-create --name=cinderv2 --type=volumev2 --description="OpenStack Block Storage v2"

root@controller# keystone endpoint-create \
  --service-id=$(keystone service-list | awk '/ volumev2 / {print $2}') \
  --publicurl=http://controller:8776/v2/%\(tenant_id\)s \
  --internalurl=http://controller:8776/v2/%\(tenant_id\)s \
  --adminurl=http://controller:8776/v2/%\(tenant_id\)s
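In the endpoint commands, the backslashes only stop the shell from interpreting the parentheses; the URL that ends up registered contains the literal template %(tenant_id)s. The sketch below prints the URL for a given host and API version so the v1 and v2 values can be compared side by side. The name cinder_endpoint_url is hypothetical, and port 8776 is taken from the commands in this post.

```shell
# Print the Block Storage endpoint URL template for a host and API version.
# cinder_endpoint_url is a hypothetical helper name.
cinder_endpoint_url() {
    # $1: host name, $2: API version (v1 or v2)
    # "%%" in the printf format emits a single literal "%".
    printf 'http://%s:8776/%s/%%(tenant_id)s\n' "$1" "$2"
}

cinder_endpoint_url controller v1
cinder_endpoint_url controller v2
```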

Restart the Block Storage Services for the changes to take effect

root@controller# service cinder-scheduler restart

root@controller# service cinder-api restart

As mentioned earlier, the cinder node is the one that actually stores and serves the data. OpenStack supports different types of storage systems; this tutorial uses LVM storage. Install the LVM package on the cinder node:

root@cinder# apt-get install lvm2

Create the LVM physical volume and the cinder-volumes volume group using the pvcreate and vgcreate commands respectively, as shown below.

root@cinder# pvcreate /dev/sda

root@cinder# vgcreate cinder-volumes /dev/sda

Add or edit the filter entry in the devices section of the /etc/lvm/lvm.conf file to keep LVM from scanning devices used by VMs, as shown below.

devices {

…

filter = [ "a/sda1/", "a/sda/", "r/.*/"]

…

}

Here sda is the device that stores the data served by the cinder-volume service, and sda1 is where the operating system data resides.
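LVM evaluates the filter entries in order, and the first pattern that matches a device decides whether it is accepted (a) or rejected (r). The sketch below imitates that first-match behaviour with grep -E so a filter can be reasoned about before editing lvm.conf. It is a simplified model under that assumption, not LVM's own matcher, and lvm_filter_decision is a hypothetical name.

```shell
# Report whether a device path would be accepted or rejected by a list of
# LVM-style filter rules such as "a/sda/" or "r/.*/".
# Simplified model: the first matching rule wins, and a device that matches
# no rule is treated as accepted.
lvm_filter_decision() {
    dev=$1
    shift
    for rule in "$@"; do
        action=${rule%%/*}      # "a" (accept) or "r" (reject)
        pattern=${rule#?/}      # drop the leading "a/" or "r/"
        pattern=${pattern%/}    # drop the trailing "/"
        if printf '%s\n' "$dev" | grep -Eq -- "$pattern"; then
            if [ "$action" = "a" ]; then echo accept; else echo reject; fi
            return 0
        fi
    done
    echo accept
}

# The filter from this post, applied to two example device paths.
lvm_filter_decision /dev/sda1 'a/sda1/' 'a/sda/' 'r/.*/'
lvm_filter_decision /dev/sdb  'a/sda1/' 'a/sda/' 'r/.*/'
```

With the filter shown in this post, anything under sda is accepted and every other device falls through to the final reject rule.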

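Install the cinder-volume package on the cinder node:

root@cinder# apt-get install cinder-volume

Then add or modify the [keystone_authtoken] section of the /etc/cinder/cinder.conf file on the cinder node: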

[keystone_authtoken]

auth_uri = http://controller:5000

auth_host = controller

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = cinder

admin_password = CINDER_PASS

The CINDER_PASS must match the one in the cinder configuration file on the controller.

Configure the cinder node to use RabbitMQ on the controller node as the message broker by editing the [DEFAULT] section of the /etc/cinder/cinder.conf file as shown below

[DEFAULT]

…

rpc_backend = rabbit

rabbit_host = controller

rabbit_port = 5672

rabbit_userid = guest

rabbit_password = RABBIT_PASS

Make sure to replace RABBIT_PASS with the RabbitMQ guest user password that you set while configuring the message service on the controller node.

Configure the database connection in the [database] section of the same file:

[database]

…

connection = mysql://cinder:CINDER_DBPASS@controller/cinder

Replace CINDER_DBPASS with the password you set for the cinder database user on the controller.

In the [DEFAULT] section, set the my_ip option to the management IP address of the cinder node. This example uses 192.168.1.41, the eth0 IP address of the cinder node.

[DEFAULT]

my_ip = 192.168.1.41

The Block Storage service needs the Image service (glance) to create and access bootable volumes. Set the glance_host option to controller under the [DEFAULT] section of the /etc/cinder/cinder.conf file as shown below.

[DEFAULT]

glance_host = controller
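Several options have now been set on the cinder node, so a quick check before restarting can catch one that was skipped. The sketch below is illustrative only: missing_options is a hypothetical helper, and it simply looks for uncommented "name =" lines anywhere in the file without checking which section they belong to.

```shell
# Print each option name that has no uncommented "name = ..." line in a file.
# missing_options is a hypothetical helper; it ignores section headers.
missing_options() {
    conf=$1
    shift
    for opt in "$@"; do
        grep -Eq "^[[:space:]]*${opt}[[:space:]]*=" "$conf" || echo "$opt"
    done
}
```

For example, `missing_options /etc/cinder/cinder.conf rpc_backend rabbit_host rabbit_password connection my_ip glance_host` prints nothing when every option from this post is present.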

Restart the Block Storage services with the new settings:

root@cinder# service cinder-volume restart

root@cinder# service tgt restart

To verify that the cinder service works, create a new cinder volume. Perform the following steps on the controller node.

Source the admin-openrc.sh file

root@controller# source admin-openrc.sh

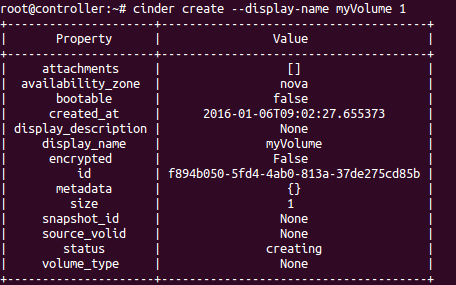

Use the cinder create command to create a new 1 GB volume named myVolume1

root@controller# cinder create --display-name myVolume1 1

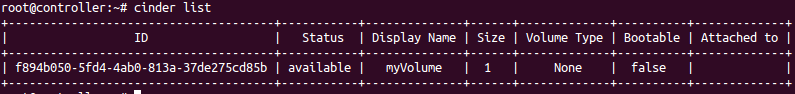

Make sure that the volume has been correctly created with the cinder list command:

root@controller# cinder list

The above command lists the volumes that have been created, along with their status. If the status shown is not “available”, the volume creation has failed. Check the log files in the /var/log/cinder/ directory on the controller and cinder nodes to get more information about the failure.
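The status check can also be scripted by filtering the table that cinder list prints. The sketch below assumes a "|"-separated table whose first three columns are ID, Status and Display Name, in that order; that layout is an assumption about the client output, and volumes_not_available is a hypothetical helper name.

```shell
# Read a cinder-list-style table on stdin and print "name status" for every
# volume whose status is not "available".
# Assumes the columns are: ID | Status | Display Name | ...
volumes_not_available() {
    awk -F'|' '
        NF >= 4 {
            id = $2; status = $3; name = $4
            gsub(/^[ \t]+|[ \t]+$/, "", id)
            gsub(/^[ \t]+|[ \t]+$/, "", status)
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            if (id == "ID") next    # skip the header row
            if (status != "available") print name, status
        }
    '
}
```

On the controller it could be used as `cinder list | volumes_not_available`; empty output would mean every listed volume is available.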

The next part of this configuration deals with integrating the cinder API with librbd so that instances, volumes and images are stored on the Ceph Storage Cluster using the Ceph Block Device (RBD).

Recommended Readings

OpenStack Cloud Computing Fundamentals

OpenStack On Ubuntu – Part 1- Prerequisite Setup

OpenStack on Ubuntu – Part 2 – Identity or Keystone Service

OpenStack on Ubuntu – Part 3 – Image or Glance Service

OpenStack on Ubuntu – Part 4 – Compute or Nova Service

OpenStack on Ubuntu – Part 5 – Dashboard or Horizon Service

OpenStack integration With CEPH Block Device (RBD)
